When attempting to get the best long-range accuracy, there are a number of contributing factors. Some of them are:

- The firearm components including barrel, scope, bedding of the stock, etc.

- The consistency of the bullets in weight, jacket thickness, and shape

- The consistency of the primers

- The consistency of the shell casing

- The consistency of the powder

- The consistency of the powder charge

When reloading, the last five are the ones most under your control. You buy match-grade bullets and primers and obtain good brass. You might even weigh each piece of brass and turn the necks to a uniform wall thickness.

Muzzle velocity (MV) variation is a major contributor at longer ranges. Suppose you are shooting a 69 grain Sierra MatchKing bullet with a BC of 0.301 at an MV of 3000 fps.

Here are the odds of getting a 0.5 MOA result at various ranges, assuming everything else is perfect (zero wind, perfect bullets, etc.) and muzzle velocity variation is the only contributor to inaccuracy (computed with Modern Ballistics):

| MV Stdev \ Range | 200 | 300 | 400 | 500 |
|---|---|---|---|---|
| 10 fps | 100% | 100% | 100% | 99.6% |
| 15 fps | 100% | 100% | 98.7% | 80.8% |
| 20 fps | 100% | 99.8% | 84.4% | 50.4% |

As a reference point for expected MV standard deviations: with 55 grain American Eagle FMJ ammo I get 20 to 25 fps. If I let the default powder measure on the Dillon 550 throw the powder charges, I sometimes get up to 30 fps. With match ammo from Federal and Black Hills, using samples of 10 or more shots, I typically see 12 to 18 fps, with one 10-shot sample giving me 8.3 fps.

As you can see, muzzle velocity variation makes a big difference, and it’s tough to get it down to around 10 fps.

The next question is, “How tight a tolerance on powder mass is required to get the standard deviation down to around 10 fps?” Or, put another way, “What is the MV change per unit mass of powder?”

By measuring the average velocity for powder charges on either side of your chosen load, you can get an approximate answer. It’s important not to make the difference from the load in question too large, because the relationship between powder mass and velocity is not linear. And if you make the delta too small, you lose your “signal” in the “noise”.
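The bracketing calculation is just a finite difference of the two group means. A minimal sketch, assuming velocity is close to linear in charge weight between the two charges; the 24.0/24.6 grain charges and all shot velocities below are made-up illustration values, not measurements:

```python
from statistics import mean


def fps_per_tenth_grain(low_charge_gr, low_shots_fps, high_charge_gr, high_shots_fps):
    """Estimate MV sensitivity (fps per 0.1 grain) from two bracketing loads.

    Finite difference of the mean velocities; assumes the velocity/charge
    curve is close to linear between the two charges.
    """
    delta_m = high_charge_gr - low_charge_gr
    if delta_m <= 0:
        raise ValueError("high_charge_gr must be larger than low_charge_gr")
    delta_v = mean(high_shots_fps) - mean(low_shots_fps)
    return delta_v / delta_m * 0.1


# Hypothetical chronograph strings 0.3 grain either side of a 24.3 grain load
low = [2960, 2972, 2955, 2968, 2965]   # 24.0 grain
high = [3030, 3022, 3041, 3028, 3034]  # 24.6 grain
print(f"{fps_per_tenth_grain(24.0, low, 24.6, high):.2f} fps per 0.1 grain")
```

The "noise" in this estimate is the uncertainty of each group mean, roughly the shot-to-shot standard deviation divided by the square root of the number of shots. With a 10 fps SD and 5 shots per group, each mean is uncertain by about 4.5 fps, which is why a wider bracket or more shots per group helps.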

I did this measurement for two different powders in .223 loads. I was a bit surprised to find that for both powders the muzzle velocity sensitivity to powder mass was very nearly the same, and larger than I expected: 11.10 fps/0.1 grain for Varget and 10.14 fps/0.1 grain for CFE 223.

What this means is that a powder charge variation of +/- 0.1 grain can blow your entire muzzle velocity standard deviation budget!
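To put the "budget" in numbers, here is a minimal sketch assuming velocity is linear in charge weight over +/- 0.1 grain and that independent error sources add in quadrature (their variances sum). The function names are illustrative; the 11.10 fps/0.1 grain figure is the Varget measurement above.

```python
import math

FPS_PER_TENTH = 11.10  # Varget sensitivity measured above, fps per 0.1 grain


def mv_stdev(charge_stdev_gr, fps_per_tenth=FPS_PER_TENTH):
    """MV standard deviation caused by charge-weight scatter (linear model)."""
    return charge_stdev_gr / 0.1 * fps_per_tenth


def uniform_stdev(half_width):
    """Standard deviation of values spread evenly over +/- half_width."""
    return half_width / math.sqrt(3)


def remaining_budget(target_fps, *used_fps):
    """SD left for all other sources, assuming independent errors add in quadrature."""
    leftover = target_fps ** 2 - sum(u * u for u in used_fps)
    return math.sqrt(leftover) if leftover > 0 else 0.0


worst = mv_stdev(0.1)                   # charge SD of a full 0.1 grain
uniform = mv_stdev(uniform_stdev(0.1))  # charges spread evenly over +/- 0.1 grain
print(f"SD 0.1 gr: {worst:.1f} fps, left for everything else: {remaining_budget(10, worst):.1f} fps")
print(f"uniform +/-0.1 gr: {uniform:.1f} fps, left: {remaining_budget(10, uniform):.1f} fps")
```

A charge SD of 0.1 grain alone gives about 11.1 fps, more than the whole 10 fps target. Even charges spread evenly over +/- 0.1 grain (SD of about 0.058 grain) contribute about 6.4 fps, leaving only about 7.7 fps for brass, primers, bullets, and the rifle combined.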

My electronic powder scale only has a resolution of +/- 0.1 grain. Furthermore, I have found that with extruded, cylindrical-kernel powders like Varget, three kernels weigh about 0.1 grain. Hence, if you want to get the muzzle velocity standard deviation of a relatively small powder charge into the range of 10 fps, you must measure it down to, literally, one or two kernels of powder.
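Combining the kernel weight with the measured Varget sensitivity gives a rough per-kernel figure, assuming every kernel weighs about the same:

$$
m_{\text{kernel}} \approx \frac{0.1\ \text{gr}}{3} \approx 0.033\ \text{gr},
\qquad
\Delta v_{\text{kernel}} \approx 0.033\ \text{gr} \times \frac{11.10\ \text{fps}}{0.1\ \text{gr}} \approx 3.7\ \text{fps}
$$

So each kernel added or missed moves the muzzle velocity by several fps.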

So, how do you do that?

What I did was set my electronic charge dispenser to output 0.1 grain less than my desired charge. I then add one, two, or three additional kernels of powder and stop when the scale first indicates the correct charge. Using this technique I loaded 15 rounds and measured them with a Doppler radar chronograph. I got a standard deviation of 12.5 fps from a load with approximately 11.1 fps of velocity change for each 0.1 grain of powder.
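The dispense-low-then-trickle procedure can be explored with a small Monte Carlo sketch. It models only the powder term and an idealized scale that rounds the true weight to the nearest 0.1 grain; it ignores drift, static, and settling time. The target charge, dispenser scatter, and kernel weight spread are hypothetical values chosen for illustration.

```python
import random
import statistics

# All parameters below are hypothetical illustrations, not measured values.
TARGET_GR = 24.3          # desired charge (hypothetical)
DISPENSE_OFFSET_GR = 0.1  # dispenser set 0.1 grain low, as in the text
DISPENSER_SD_GR = 0.03    # assumed scatter of the dispenser's throw
KERNEL_MEAN_GR = 0.1 / 3  # roughly three Varget kernels per 0.1 grain
KERNEL_SD_GR = 0.005      # assumed kernel-to-kernel weight variation
FPS_PER_GR = 111.0        # 11.10 fps per 0.1 grain, from the Varget measurement


def tenths(weight_gr):
    """Idealized scale display in whole tenths of a grain (rounds to nearest)."""
    return round(weight_gr * 10)


def charge_one_round(rng):
    """Dispense low, then trickle single kernels until the scale first reads the target."""
    weight = rng.gauss(TARGET_GR - DISPENSE_OFFSET_GR, DISPENSER_SD_GR)
    while tenths(weight) < tenths(TARGET_GR):
        # Clip so every kernel adds at least a little weight.
        weight += max(0.01, rng.gauss(KERNEL_MEAN_GR, KERNEL_SD_GR))
    return weight


def simulate(n=10_000, seed=1):
    """Return mean charge, charge SD (grains), and implied powder-only MV SD (fps)."""
    rng = random.Random(seed)
    charges = [charge_one_round(rng) for _ in range(n)]
    sd_gr = statistics.stdev(charges)
    return statistics.mean(charges), sd_gr, sd_gr * FPS_PER_GR


mean_gr, sd_gr, sd_fps = simulate()
print(f"mean {mean_gr:.3f} gr, charge SD {sd_gr:.4f} gr, powder-only MV SD about {sd_fps:.1f} fps")
```

In this model the display flips to the target reading about 0.05 grain below it, so stopping at the first kernel that changes the display confines most charges to a band roughly one kernel wide. Changing `KERNEL_MEAN_GR` or the rounding in `tenths` shows how kernel size and scale resolution bound the powder contribution.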

So what I want to know is: how do factories turn out hundreds of thousands (millions?) of rounds of match ammo with standard deviations in the range of 10 fps?

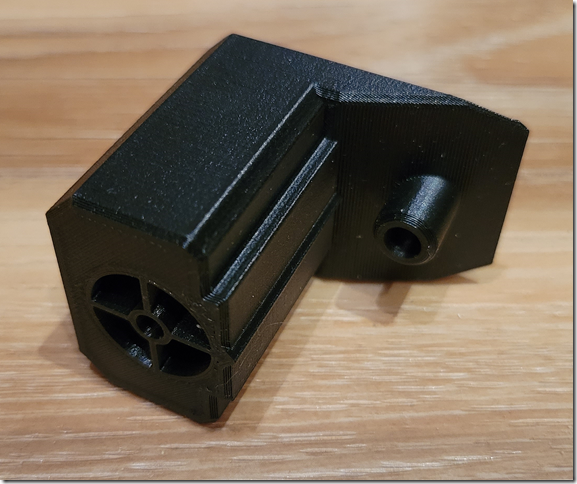
