Quote of the Day

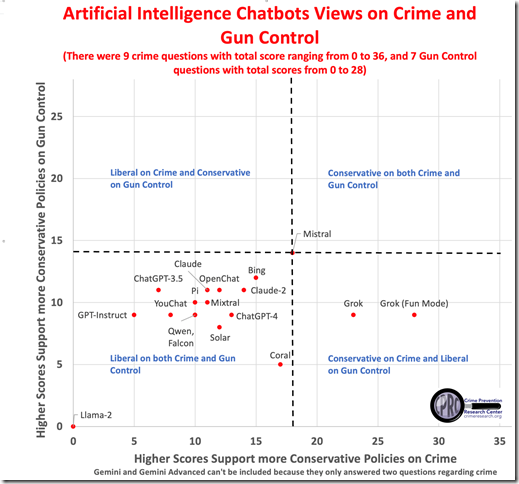

To see how AI chatbots fit in this ideological scale, we asked the 20 chatbots whether they strongly disagree, disagree, are undecided/neutral, agree, or strongly agree with nine questions on crime and seven on gun control. Only Elon Musk’s Grok AI chatbots gave conservative responses on crime, but even these programs were consistently liberal on gun control issues. Bing is the least liberal chatbot on gun control. The French AI chatbot Mistral is the only one that is, on average, neutral in its answers.

John R. Lott

March 22, 2024

AI’s Left-Wing Bias on Crime and Gun Control | RealClearPolitics

Via email from Rolf.

I find it hard to imagine the algorithms were tweaked to reflect this sort of bias on a wide scale, on all topics which might be divided along a conservative/liberal line.

I’m wondering if perhaps there are more sources for the liberal viewpoint and the chatbots consider quantity as a measure of truth. I envision it being difficult to algorithmically determine the quality of a scientific study. Using the quantity of a particular viewpoint as a proxy for quality could easily result in such a bias.

In any case, keep these biases in mind as AI is incorporated into more and more aspects of our lives. An AI bias against reality may mean there is an opportunity for people with vision and products which better match reality.

The source of the bias is simpler than that.

Generative AI lacks critical thinking, and can only generate text that is a probable response to the conversation based on a model of many, many other similar discussions. It adapts mid-conversation to the social context of the conversation to fit the social intelligence model of that training corpus. In other words, it responds based on how it thinks the other participant expects it to respond, without thinking.

Generative AI is liberalism.

Sounds to me like that would be perfect Newspeak.

Proving once again they’re just robots named intelligent?

As Tasso said, no critical thinking going on. Most all gun control arguments are completely based on emotions.

Something digital is going to have a very hard time making logic out of it. (As no human based in reality has been able to yet.)

Unless it’s not able to feel embarrassment and humiliation over being completely wrong? Then self-correcting. (Something communists are incapable of also.)

The real test is if AI will correct itself once it’s been shown to be wrong?

And answer correctly from that point forward. To everyone.

The problem with using the internet as a source for training an AI is that it necessarily reflects, not humanity, but the portion of humanity that gets satisfaction from posting on the internet.

Similarly with using media reports from established media companies: if you’ve ever seen any survey of media ideological leanings, you’ll discover a population of people that have read Marx and didn’t get the joke, and you’ll get an AI that behaves accordingly.

Where’s the AI that was trained on the writings of the Founding Fathers, John Locke, Hayek, Thomas Sowell, Frederick Douglass, and contemporary people who think those luminaries are pretty neat? Perhaps the classic Stoics, and other classical works of literature? How about, before we read Noam Chomsky into the AI, we input the study that found his theory of neurolinguistics (the basis of why people ever thought he was a smart cookie) to be not simply wrong, but never even slightly right?

Different subject matter, but I’d be interested in seeing a similar study done on anthropogenic climate change and/or global warming. I’m sure the vast bulk of studies show minor to catastrophic changes coming, but there is a less popular, but scientifically well-supported contrary opinion, which just isn’t revealed by mass media or popular press.

Yes, including the elephant in the room, which is that in a number of periods in the past 10k years it has been significantly warmer than today, and in fact warmer than the “sky is falling” threshold pushed by the warmists.

It is actually worse than that in that there is zero evidence that global warming makes things worse and substantial evidence from history and archeology that it makes things better.

But, but, but!! Baby polar bear cubs! (Turns out that polar ice is as extensive as ever, even if ice pack dwindles locally) The Maldive islands! (Uh, who cares?) Intensifying hurricanes! (Not happening).

Most likely the training data was “weighted” by “credibility” or rather “authority”.

Most people do the same thing with their *own* “training data”, so that skew is on the internet even before the additional “weighting”.

The Greeks used to teach “rhetoric” to children. They were expected to memorize and recognize the “standard fallacies” in argument, including the “appeal to authority”.

For reference (from Wikipedia):

“In medieval Latin, the trivia (singular trivium) came to refer to the lower division of the Artes Liberales: grammar, rhetoric, and logic. These were the topics of basic education, and were foundational to the quadrivia of higher education: arithmetic, geometry, music, and astronomy.”

How far we have fallen.

The answer is simpler than all that. The earliest chatbots gave consistently neutral/conservative answers (because we live in reality), which pissed off the owners. (Remember, all these companies are on the Left coast.) So they ordered them to be “adjusted” to better fit the Leftist mantras. This made them unstable. Thus, the next generation of chatbots, and the next, and the next.

Chatbots don’t draw white people as black by accident. They were very carefully programmed to erase white history, culture, and people. For all the same reasons, and by the same (((people))) who control all the anti-white advertising you see these days.