In 1984 I wrote a paper for the company I was working for at the time. It was in support of a new test instrument the company was about to release. The paper was published in the IEEE Instrumentation and Measurement Technology Conference Proceedings. I was scheduled to go to Long Beach California and present the paper during the conference January 17-18, 1984. But the company cancelled the release of the product and I did not attend the conference.

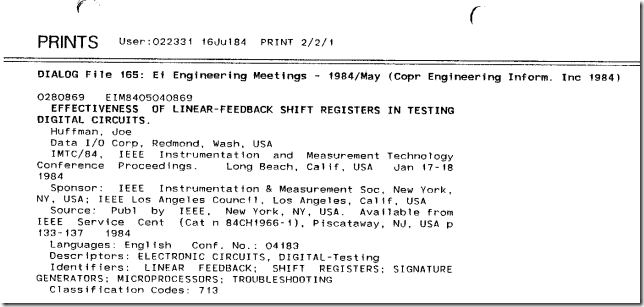

Before there was the World Wide Web there were online services you could subscribe to, dial up with a modem (1200 baud rocked!) and do searches of periodicals, journals, papers, etc. This is what one of those services, Dialog, had in their records in July of 1984:

A scan of the paper is here (click on each to get a readable version):

Today, over 30 years later, there is probably very little of the paper which is applicable to modern test equipment. But something I learned while writing the paper is something I still occasionally “put people in their place” with.

Unless you know the something about the error statistics of whatever digital system you are trying to test then it almost doesn’t matter which checksum, hash, or CRC you use for error detection. In fact, surprising to nearly everyone, if you assume that all errors are equally likely, then you can just pick the last (or first, whatever) 256 bits of a digital message and have just as good error detection as any other 256-bit hash. Or if you are using a 16-bit checksum then you might as well use the last (or first, whatever) 16 bits of the message.

It all boils down to the assumptions about the types of errors in the message. You, whether you realize it or not, make lots of assumptions about the types of errors in a digital message. For example you assume it is very unlikely, compared to other types of errors, that every 17th bit will be inverted. Or that every DWORD will be XORed with 0xBAADF00D. But the assumption, “every error is equally likely” means the math for detecting those errors will arrive at an interesting conclusion:

For a message N bits long there are 2N-1 possible errors. Any hash, checksum, etc., M bits long can only have 2M different states. One of those states represents a valid hash/checksum/etc. The other 2M – 1 represent detected errors.

If all errors are equally likely then those 2N-1 possible errors are equally mapped into each of the 2M possible states of the hash. It will only detect a fraction of those errors. The fraction will be (2M-1)/(2M). Or stated differently the fraction of errors which map into the valid hash is 1/2M. For a N bit message (2N-1)/2M errors are missed. For 2N >> 1 (all real world cases) this is essentially equal to 2N/2M or 2(N – M).

If you use the last M bits of the message it will detect all 2M-1 errors in the last M bits and miss 2(N-M) errors in the previous part of the message.

Hence it does not matter if you use a M bit hash of the entire message or the last M bits of the message. The same number of errors will be escape detection.

In “real life”, not all errors are equally likely. This is particularly true when you are trying to detect messages which have been altered by an attacker. But there are many situations where people spend way too much effort trying to determine the “best” hash to use when just using the first/last/whatever M bits or a simple checksum of M bits will work just as well as the latest NSA blessed crypto hash and consume far less computational resources.

I find this counter intuitive and very interesting. I suspect it says more about our intuition than anything.

Observation / speculation :

Percent of people reading this blog that followed / understood / appreciated the post is likely >75%.

Percent of business managers and politicians that have the collective power to spend (or direct spending of) billions / trillions of dollars buying equipment that may depend on such technology that understand and appreciate it is likely <<1%.

Especially when their livelihood depends on them not understanding it.

I should go read the paper, but for now some comments on the post. Clearly there’s a fundamental difference between “errors” caused by attackers, and those caused by random events. And it is essential to know which tools are suitable for which purpose. Secure hashes for the former, CRC and checksums for the latter.

For example, it became clear to me that IEEE cannot be trusted with crypto when I saw “WEP” used CRC for its data integrity function. That would be bad enough by itself, but when combined with a stream cipher (RC4) it becomes particularly laughable. Doing that in Crypto 101 would earn an automatic F, but this stupidity was foisted onto the world and advertised as “Wired Equivalent Privacy”.

I think WPA is somewhat better, but still, if I want real crypto I reach for IPSec.

Meanwhile, in checksums and the like, not all are equivalent. CRCs specifically catch burst errors. LFSRs and one’s complement sums are good with random errors. Two’s complement sums not as much, because of the off-the-end carry — this is why TCP/IP uses one’s complement. (Well, that and also the fact that it is independent of byte order.)

Basically there is no free lunch. If you choose something good for burst errors you loose something some place else.

My point, which could have been make more explicit, is that you really need to know what your trying to detect. You can’t just say, as I’ve heard some do, all errors are equally likely. The chances of most bits being in error is seldom as the same as just a few bits being in error. I think this is what our intuition is telling us and why hashes, checksums, and CRCs feel more secure to us.

“1200 baud rocked!”

And our Hamming units that added error detection to the stream of crypto’ed data were humungous by today’s standards. Ah, the good ole days when things were big enough to work on without a microscope. Nothing significant to add, just reminiscing. Interesting stuff, Joe.

Ha! I was trying to think back to 1984 and what kinds of computers were around then.

The IBM personal computer (one or two floppy drives) had been out for a short while. The IBM XT (10 MByte hard drive!) came out in early 1984.

With the huge floppy discs? I just went and looked — and I had forgotten that the Commodore 64 came out in ’82.

I had to read it twice, still not sure if I really understand the material.

To sum up my technician level understanding of the material, the smaller the message size and the larger the checksum size used against the message size the more errors you can detect? As M approaches N you really get down to only two possible errors that could be missed and when M=N there is only one possible error? Of course this mean as M approaches N the more overhead involved in error checking.

Which explains the military fondness for X.25 protocol? Although the protocol itself won’t detect/prevent a MITM attack, it has very redundant error checking.

I wouldn’t say “more errors”. I would say a higher percentage of errors. When the “check” (of whatever type) is the same size as the message you can detect all errors in the message. Basically you just duplicate the message with your “check”.

I don’t know anything about X.25.

X.25 is an older networking protocol, mostly replaced by IP. You could say that the error checking mechanism is a complete resend of the recieved message back to the sender, by packet. So by percentage, X.25 was about 55% overhead for routing and error checking (resending each message as confirmation of receipt), where as something like ATM uses 8 bits out of each 53 byte cell for error checking (cyclic redundancy check).

The Army had a fling with ATM networks to provide a “cloud” solution to unify voice, data, and video teleconference services to tactical communications clients, but in the end just went with Cisco and Tandberg running pretty much everything over IP (although we can still run IP over ATM).

We’ve found that X.25 is great for short text messages over “dirty” radio to radio data links, where ATM is generally good enough for real time VTC.

Thank you for sharing the math behind error checking. It is always good for someone with “technician” level understanding like myself to see how an Engineer looks at the technology.

The only reason I know for people liking X.25 is inertia and lack of understanding. In fact, X.25 is a poorly designed protocol — with errors that were well understood well before it was created. A good example is the two way connection handshake, when people who knew what they were doing were quite aware that you need a three way handshake.